Sonatus, in collaboration with the AWS Generative AI Innovation Center, now uses Amazon Bedrock for AI-driven vehicle policy creation. The system cuts the time required to generate complex data collection and automation policies for Software-Defined Vehicles (SDVs) from days of manual work to minutes. It addresses two challenges OEMs face: managing vast numbers of vehicle data signals and automating vehicle functions.

OEM engineers traditionally struggle with thousands of vehicle signals when creating data collection policies for Sonatus Collector AI. Similarly, Automator AI’s no-code workflows for vehicle function automation prove challenging without deep signal knowledge. To overcome these hurdles, Sonatus and AWS developed a natural language interface. This interface uses generative AI to create policies, making the process accessible to both engineers and non-experts.

Advancing AI-driven Vehicle Policy with LLM Chains

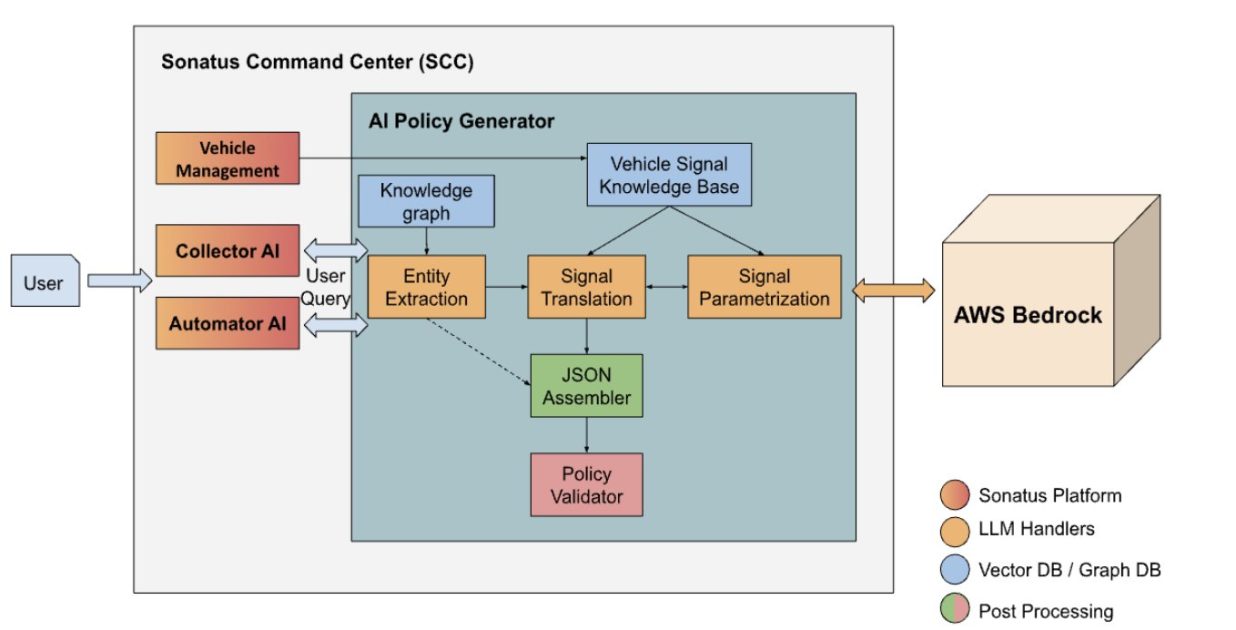

The new system employs a chain of large language models (LLMs) to automate policy generation. It begins with entity extraction, which breaks a user's natural language query down into structured XML. This step identifies triggers, target data, actions, and associated tasks for both Collector AI and Automator AI. Few-shot examples guide the LLMs in this initial step so that queries are interpreted more accurately.
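
To make the intermediate representation concrete, the following is a minimal sketch of what parsing such XML might look like. The article does not publish the actual schema, so the tag names (`policy`, `trigger`, `target_data`, `action`, `task`) and the example query are assumptions made purely for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical intermediate XML that an entity-extraction LLM might emit.
# The tag and attribute names are illustrative, not Sonatus's real schema.
EXAMPLE_XML = """
<policy type="collector">
  <trigger>vehicle speed exceeds 100 km/h</trigger>
  <target_data>battery temperature</target_data>
  <target_data>coolant temperature</target_data>
  <action>record for 30 seconds</action>
</policy>
"""


def texts(root, tag):
    """Return the stripped text of every direct child element named `tag`."""
    return [(el.text or "").strip() for el in root.findall(tag)]


def parse_policy(xml_text):
    """Turn the intermediate XML into a plain dict of extracted entities."""
    root = ET.fromstring(xml_text.strip())
    return {
        "type": root.get("type"),
        "triggers": texts(root, "trigger"),
        "target_data": texts(root, "target_data"),
        "actions": texts(root, "action"),
        "tasks": texts(root, "task"),
    }


policy = parse_policy(EXAMPLE_XML)
print(policy["target_data"])
```

At this stage the entities are still free text; mapping phrases such as "coolant temperature" to concrete signals happens in the next stage.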

Subsequently, the system performs signal translation and parametrization. It identifies correct vehicle signals from the intermediate XML, converting them into the industry-standard Vehicle Signal Specification (VSS) format. A vector database stores preprocessed VSS signals and metadata. The system then uses vector-based lookups and reranking techniques to select the most relevant signals. A multi-agent approach, featuring a ReasoningAgent and a JudgeAgent, iteratively refines signal identification, improving accuracy and resolving ambiguities, such as distinguishing between engine and cabin cooling signals.
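
The retrieve-then-rerank idea can be sketched with a toy example. Everything here is an assumption for illustration: the bag-of-words "embedding" stands in for a real embedding model and vector database, the signal paths only follow VSS-style naming with paraphrased descriptions, and the `DOMAIN_HINTS` reranker is a hypothetical stand-in for whatever reranking technique the production system uses.

```python
import math
import re
from collections import Counter

# Tiny stand-in for the vector database: VSS-style path -> short description.
# Descriptions are paraphrased for this sketch, not quoted from the VSS catalog.
SIGNALS = {
    "Vehicle.Powertrain.CombustionEngine.ECT": "coolant temperature of the combustion engine",
    "Vehicle.Cabin.HVAC.IsAirConditioningActive": "cabin cooling active",
    "Vehicle.Cabin.HVAC.AmbientAirTemperature": "cabin air temperature",
    "Vehicle.Speed": "vehicle speed",
}

# Hypothetical hints mapping a query word to the VSS branch it implies.
DOMAIN_HINTS = {"engine": "Powertrain", "cabin": "Cabin"}


def embed(text):
    """Toy embedding: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, k=3):
    """First pass: top-k signals by similarity, dropping zero-score matches."""
    q = embed(query)
    scored = sorted(
        ((cosine(q, embed(desc)), path) for path, desc in SIGNALS.items()),
        reverse=True,
    )
    return [path for score, path in scored[:k] if score > 0]


def rerank(query, candidates):
    """Second pass: prefer candidates in the branch the query hints at."""
    q_tokens = set(embed(query))

    def hint_score(path):
        return sum(
            1
            for word, branch in DOMAIN_HINTS.items()
            if word in q_tokens and branch in path.split(".")
        )

    # sorted() is stable, so ties keep their first-pass order.
    return sorted(candidates, key=hint_score, reverse=True)


candidates = retrieve("engine cooling")
print(candidates)
print(rerank("engine cooling", candidates))
```

In this toy setup the first pass ranks the cabin air-conditioning signal above the engine coolant signal for the query "engine cooling", and the second pass reverses that order. This mirrors, in a very simplified way, the engine-versus-cabin ambiguity that the ReasoningAgent and JudgeAgent are described as resolving.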

The solution integrates several key enhancements. Task merging resolves incomplete information in adjacent tasks within Automator AI policies, streamlining workflows. Merging prompts to eliminate redundant LLM calls, together with the use of Anthropic’s Claude 3 Haiku, significantly lowers latency. Furthermore, context-driven policy generation, which incorporates knowledge base lookups and user clarification, makes signal selection more precise. Together, these enhancements make AI-driven vehicle policy creation both faster and more accurate.
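
As an illustration of task merging, the sketch below combines adjacent tasks when at least one of them is incomplete and their fields do not conflict. The task representation (dicts with `action`, `signal`, and `value` keys) and the merging rule are assumptions for this example; the article does not describe the actual data model or algorithm.

```python
# Fields a task needs before it counts as complete (hypothetical rule).
REQUIRED = ("action", "signal")


def is_complete(task):
    """A task is complete when every required field is present and not None."""
    return all(task.get(field) is not None for field in REQUIRED)


def compatible(a, b):
    """Two tasks can merge if no field is set to different values in both."""
    return all(
        a[key] == b[key]
        for key in a.keys() & b.keys()
        if a[key] is not None and b[key] is not None
    )


def merge_adjacent(tasks):
    """Fold each incomplete task into its neighbor when their fields fit."""
    merged = []
    for task in tasks:
        prev = merged[-1] if merged else None
        needs_merge = prev is not None and not (
            is_complete(prev) and is_complete(task)
        )
        if needs_merge and compatible(prev, task):
            # Fill in only the fields the current task actually provides.
            filled = {k: v for k, v in task.items() if v is not None}
            merged[-1] = {**prev, **filled}
        else:
            merged.append(dict(task))
    return merged


tasks = [
    {"action": "set", "signal": None},
    {"signal": "Vehicle.Cabin.HVAC.IsAirConditioningActive", "value": True},
    {"action": "notify", "signal": "Vehicle.Speed"},
]
print(merge_adjacent(tasks))
```

Here the first two entries each carry half of one instruction, so they collapse into a single task, while the third is already complete and stays separate.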